
Case Study: How We Automated Weekly Reporting for 50 Branches, Saving 100+ Hours Per Month

In a multi-location business, manual reporting isn't just a task; it's an operational drag that quietly erodes efficiency and delays critical insights. For a growing enterprise, chasing down, compiling, and correcting data from dozens of sources can become a full-time job. This case study breaks down how we solved this exact problem by automating weekly reports for a 50-branch retail chain. We'll explore the architecture of a robust, scalable automation solution that transformed their reporting process, cutting manual effort by 98% and delivering accurate reports on schedule every week.

The Challenge: A System Paralyzed by Data Silos and Manual Toil

Before we dive into the solution, it's crucial to understand the depth of the problem. Our client was stuck in a cycle of inefficiency that was hindering their growth and ability to react to market changes.

Client Profile & Initial State

Our client is a fast-growing retail enterprise with 50 branches spread across the APAC region. Their success was creating a significant operational challenge: a completely manual weekly sales and operations reporting process that was buckling under its own weight.

During our initial consultation, we identified several key pain points:

- Data Fragmentation: Each of the 50 branch managers submitted their weekly reports via email. The data was locked in spreadsheets with inconsistent formatting, column names, and even calculation methods.

- Extreme Time Sink: Branch managers, who should have been focused on sales and team leadership, were spending over 2 hours every week manually compiling this data. Across 50 branches, that is more than 100 hours of lost productivity every week, and well over 100 hours every month.

- High Error Rate: With dozens of people manually entering and copying data, errors were inevitable. A single typo or misplaced decimal could skew the entire consolidated report, leading to hours of painful, line-by-line detective work at headquarters.

- Delayed Decision-Making: Due to the lengthy consolidation process, senior management wouldn't receive the final weekly report until Wednesday afternoon. This two-day lag made it impossible to implement agile, data-driven strategies for the current week.

- Lack of Scalability: The company had plans to open 20 more branches, but their current system couldn't handle the load. Onboarding a new branch meant compounding the existing problem, adding more hours and more potential for error.
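A quick check of the time-sink figure above, assuming exactly 2 hours per branch per week and averaging 52 weeks over 12 months:

$$
2\,\frac{\text{h}}{\text{branch} \cdot \text{week}} \times 50\ \text{branches} = 100\,\frac{\text{h}}{\text{week}},
\qquad
100\,\frac{\text{h}}{\text{week}} \times \frac{52\ \text{weeks}}{12\ \text{months}} \approx 433\,\frac{\text{h}}{\text{month}}
$$

At the stated rate, "over 100 hours a month" is a conservative floor; the monthly total is closer to 430 hours.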

Solution Architecture: Applying Software Engineering Principles to Business Process Automation

To solve this, we didn't just build a simple script. We approached the challenge as a software infrastructure design problem. Our goal was to create a system that was not only efficient but also clean, maintainable, and ready to scale for the next 100 branches.

Foundational Principles: Building for a Lean and Robust Future

Our philosophy is that business process automation should be built with the same rigor as a software application. We applied several core software engineering principles to guide our architecture.

- Separation of Concerns: We broke the monolithic task of "creating reports" into distinct, independent modules: one for ingesting data, one for processing it, and one for generating the final report files. This makes the system easier to debug and update.

- Open-Closed Principle: The core system was designed to be closed for modification but open for extension. This means we can easily add a new branch, a new data source, or a new report type without having to rewrite the existing, stable logic.

- Modularity: Using a node-based tool like N8N was key. It allowed us to build reusable components (nodes) and sub-workflows that could be called upon by the main process, similar to functions in a programming language. You can learn more about these foundational concepts in Martin Fowler's guide to software design principles.
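The Open-Closed and modularity principles above can be illustrated outside N8N with a small handler registry. This is a Python sketch under stated assumptions, not the project's actual workflow: the "email" and "sftp" source types mirror connectors mentioned in this case study, while `register`, `fetch`, and the placeholder fetchers are hypothetical names. The dispatch function stays closed to modification; supporting a new source means registering one more function.

```python
from typing import Callable, Dict, List

# Hypothetical registry: maps a source type ("email", "sftp", ...) to a
# fetch function. New source types are added by registering a function,
# never by editing fetch() below (open for extension, closed for modification).
Fetcher = Callable[[str], List[dict]]
FETCHERS: Dict[str, Fetcher] = {}


def register(source_type: str) -> Callable[[Fetcher], Fetcher]:
    """Decorator that adds a fetcher to the registry under source_type."""
    def wrap(fn: Fetcher) -> Fetcher:
        FETCHERS[source_type] = fn
        return fn
    return wrap


@register("email")
def fetch_from_email(branch_id: str) -> List[dict]:
    # Placeholder: a real version would read the latest report attachment.
    return [{"branch_id": branch_id, "source": "email"}]


@register("sftp")
def fetch_from_sftp(branch_id: str) -> List[dict]:
    # Placeholder: a real version would download a file from an SFTP server.
    return [{"branch_id": branch_id, "source": "sftp"}]


def fetch(branch_id: str, source_type: str) -> List[dict]:
    """Closed core: look up the registered fetcher and call it."""
    try:
        fetcher = FETCHERS[source_type]
    except KeyError:
        raise ValueError(f"No fetcher registered for {source_type!r}") from None
    return fetcher(branch_id)
```

Adding an "api" source would mean writing one more `@register("api")` function; `fetch` itself never changes.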

The Technology Stack: The Right Tools for a Scalable Pipeline

Choosing the right tools is essential for building a reliable data pipeline. Our stack was selected for its flexibility, power, and ability to ensure data sovereignty.

- Automation Engine: We chose N8N for its powerful node-based visual workflow builder. Its flexibility with APIs, custom code nodes, and self-hostable nature made it the perfect engine for this project.

- Data Staging: A centralized, managed PostgreSQL database was set up to act as the single source of truth. All raw data was cleaned and standardized before being written to this database, ensuring data integrity for the processing stage.

- Data Connectors: We implemented a combination of email webhooks (to automatically catch incoming report emails), API calls, and the SSH File Transfer Protocol (SFTP) to create a flexible ingestion system that could accommodate each branch's technical capabilities.
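One way to keep the staging database a trustworthy single source of truth is to key each row by branch and week, so a corrected resubmission overwrites the earlier row instead of duplicating it. The sketch below assumes that design: `sqlite3` stands in for PostgreSQL so it runs without a server, and the `weekly_sales` table and its columns are hypothetical. Both databases accept the same `INSERT ... ON CONFLICT ... DO UPDATE` upsert form, although parameter placeholders differ by driver (`?` here, `%s` in psycopg).

```python
import sqlite3

# sqlite3 stands in for the PostgreSQL staging database; the upsert syntax
# below is accepted by both. Table and column names are hypothetical.
SCHEMA = """
CREATE TABLE IF NOT EXISTS weekly_sales (
    branch_id    TEXT NOT NULL,
    week_start   TEXT NOT NULL,   -- ISO date of the reporting week's Monday
    revenue      REAL NOT NULL,
    transactions INTEGER NOT NULL,
    PRIMARY KEY (branch_id, week_start)
)
"""

UPSERT = """
INSERT INTO weekly_sales (branch_id, week_start, revenue, transactions)
VALUES (?, ?, ?, ?)
ON CONFLICT (branch_id, week_start) DO UPDATE SET
    revenue = excluded.revenue,
    transactions = excluded.transactions
"""


def stage(conn: sqlite3.Connection, rows: list) -> None:
    """Write standardized rows; re-sending a branch's week overwrites it."""
    with conn:  # commits on success, rolls back if any row fails
        conn.executemany(UPSERT, rows)


conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
stage(conn, [("B01", "2023-10-23", 1200.0, 40)])
stage(conn, [("B01", "2023-10-23", 1250.0, 42)])  # corrected resubmission
print(conn.execute("SELECT * FROM weekly_sales").fetchall())
# [('B01', '2023-10-23', 1250.0, 42)]
```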

The N8N Workflow Architecture: A Step-by-Step Breakdown

The magic happens within the N8N workflow, which orchestrates the entire process from start to finish. Here’s a high-level look at how it works.


Step 1: Ingestion & Standardization Pipeline

The process kicks off automatically every Monday morning. The main N8N workflow fetches raw data from all 50 sources concurrently. Each data file is then passed to a dedicated "Standardization" sub-workflow. This sub-workflow is a critical component that:

- Cleans the data (e.g., trims whitespace, corrects common typos).

- Validates it against a predefined schema.

- Transforms it into a single, unified format.

- Writes the clean, standardized data to the staging database.
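The clean, validate, and transform steps of the "Standardization" sub-workflow can be sketched as a single function over one spreadsheet row. This is a Python illustration, not the sub-workflow itself: the target schema and the alias table are hypothetical, the alias table is one simple way to handle the inconsistent column names and "common typos" mentioned above, and the final write to the staging database is left out.

```python
from typing import Dict

# Hypothetical target schema: canonical column -> expected type.
SCHEMA = {"branch_id": str, "week_start": str, "revenue": float, "transactions": int}

# Hypothetical header variants seen in branch spreadsheets -> canonical names.
ALIASES = {
    "branch": "branch_id", "store id": "branch_id",
    "week": "week_start", "week starting": "week_start",
    "sales": "revenue", "total sales": "revenue",
    "txns": "transactions", "no. of transactions": "transactions",
}


def standardize(raw: Dict[str, str]) -> Dict[str, object]:
    """Clean, rename, validate, and type-convert one raw row.

    Raises ValueError if a required column is missing or a value
    cannot be converted to the schema type.
    """
    row = {}
    for key, value in raw.items():
        name = key.strip().lower()            # clean the header
        name = ALIASES.get(name, name)        # unify column names
        row[name] = value.strip() if isinstance(value, str) else value

    missing = [col for col in SCHEMA if col not in row]
    if missing:
        raise ValueError(f"missing columns: {missing}")

    clean = {}
    for col, typ in SCHEMA.items():
        value = row[col]
        if typ in (int, float) and isinstance(value, str):
            value = value.replace(",", "")    # "1,250.00" -> "1250.00"
        try:
            clean[col] = typ(value)
        except (TypeError, ValueError):
            raise ValueError(f"bad value for {col}: {row[col]!r}") from None
    return clean
```

For example, a row with headers `" Store ID "`, `"Week Starting"`, `"Total Sales"`, and `"Txns"` and values `"B01"`, `"2023-10-23"`, `"1,250.00"`, `" 42 "` comes out as `{"branch_id": "B01", "week_start": "2023-10-23", "revenue": 1250.0, "transactions": 42}`, while a row missing a required column raises `ValueError` and can be routed to error handling.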

Step 2: Core Aggregation & Processing

Once all branch data is standardized and stored, a central processing workflow is triggered. It queries the staging database to pull all the clean data for the week and does the heavy lifting: calculating key metrics and creating both branch-level summaries and HQ-level aggregations.
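The case study does not list the exact metrics, so the sketch below assumes three illustrative ones (revenue, transaction count, and average ticket size) to show how branch-level summaries and an HQ rollup can come from the same clean rows. It is plain Python; in practice the same grouping could equally be a SQL `GROUP BY` against the staging database.

```python
from collections import defaultdict
from typing import Dict, List


def aggregate(rows: List[dict]) -> dict:
    """Build per-branch summaries and an HQ-level rollup from clean rows.

    Each row is assumed to carry branch_id, revenue, and transactions.
    """
    per_branch: Dict[str, dict] = defaultdict(lambda: {"revenue": 0.0, "transactions": 0})
    for row in rows:
        summary = per_branch[row["branch_id"]]
        summary["revenue"] += row["revenue"]
        summary["transactions"] += row["transactions"]

    for summary in per_branch.values():
        # Average ticket size; guard against branches with no transactions.
        t = summary["transactions"]
        summary["avg_ticket"] = round(summary["revenue"] / t, 2) if t else 0.0

    total_rev = sum(s["revenue"] for s in per_branch.values())
    total_txn = sum(s["transactions"] for s in per_branch.values())
    hq = {
        "branches": len(per_branch),
        "revenue": total_rev,
        "transactions": total_txn,
        "avg_ticket": round(total_rev / total_txn, 2) if total_txn else 0.0,
    }
    return {"branches": dict(per_branch), "hq": hq}
```

Computing the HQ average from summed totals, rather than averaging the branch averages, keeps large and small branches correctly weighted.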

Step 3: Modular Report Generation

With the data processed, the workflow iterates through a list of all branches and stakeholders. For each one, it calls a reusable "Report Generation" sub-workflow. This modular approach is key; we pass in the relevant data, and the sub-workflow generates a beautifully formatted, customized PDF or Excel report. If HQ decides they want a different chart on the report, we only need to update this one sub-workflow.
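The pattern described above, one reusable generator called once per recipient, can be sketched in a few lines. To stay dependency-free, this hypothetical `render_report` emits CSV text rather than the PDF or Excel files the case study produced; the point is that every caller goes through a single entry point, so a layout change happens in one place.

```python
import csv
import io
from typing import Dict


def render_report(title: str, metrics: Dict[str, float]) -> str:
    """Hypothetical stand-in for the 'Report Generation' sub-workflow.

    Produces CSV text; a real version might emit PDF or Excel instead,
    but every caller would still use this one function.
    """
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow([title])
    writer.writerow(["metric", "value"])
    for name, value in metrics.items():
        writer.writerow([name, value])
    return buf.getvalue()


def generate_all(summaries: Dict[str, Dict[str, float]],
                 hq: Dict[str, float]) -> Dict[str, str]:
    """Call the same renderer for every branch and once for HQ."""
    reports = {bid: render_report(f"Branch {bid} weekly report", m)
               for bid, m in summaries.items()}
    reports["HQ"] = render_report("HQ weekly rollup", hq)
    return reports
```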

Step 4: Intelligent Distribution & Archiving

The generated reports are automatically distributed via email to the correct branch manager and key stakeholders at headquarters. Each email is personalized with a brief summary. Simultaneously, all reports are systematically named (e.g., Branch_A_Weekly_Sales_2023-10-30.pdf) and archived in a secure cloud storage bucket for historical analysis and compliance.
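The naming scheme in the example file name can be produced by a small helper. This sketch assumes the date in the name is the Monday of the reporting week; `archive_name` and `week_start` are hypothetical names. ISO dates (YYYY-MM-DD) have the useful property that alphabetical order matches chronological order, which keeps an archive bucket easy to browse.

```python
import datetime as dt


def week_start(day: dt.date) -> dt.date:
    """Return the Monday of the week containing `day` (assumed convention)."""
    return day - dt.timedelta(days=day.weekday())


def archive_name(branch: str, report: str, monday: dt.date, ext: str = "pdf") -> str:
    """Build names like Branch_A_Weekly_Sales_2023-10-30.pdf.

    Spaces become underscores so the name is safe as a storage key.
    """
    parts = ["Branch", branch, report]
    stem = "_".join(p.strip().replace(" ", "_") for p in parts)
    return f"{stem}_{monday.isoformat()}.{ext}"
```

Calling `archive_name("A", "Weekly Sales", week_start(dt.date(2023, 11, 2)))` returns `Branch_A_Weekly_Sales_2023-10-30.pdf`, because 2 November 2023 falls in the week beginning Monday 30 October.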

Step 5: Error Handling & Notifications

A robust automation system must plan for failure. Our workflow has built-in logic to detect issues. If a branch fails to submit its data on time, or if the submitted file is corrupted or invalid, the system doesn't crash. Instead, it automatically sends a notification email to that specific branch manager with clear instructions, while also alerting our support team. The process continues seamlessly for all other successful branches.
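The key property in Step 5 is failure isolation: each branch is processed independently, failures are recorded and reported, and the loop moves on. The Python sketch below shows that control flow under stated assumptions; `run_all`, `flaky_process`, and the `notify` callback are hypothetical stand-ins for the per-branch pipeline and the email alerts to the branch manager and support team.

```python
from typing import Callable, Dict, List, Tuple


def run_all(branches: List[str],
            process: Callable[[str], dict],
            notify: Callable[[str, str], None]) -> Tuple[Dict[str, dict], Dict[str, str]]:
    """Process each branch independently; one failure never stops the rest.

    `process` is any per-branch pipeline (fetch -> standardize -> stage);
    `notify(branch, message)` stands in for the manager/support alerts.
    """
    results: Dict[str, dict] = {}
    failures: Dict[str, str] = {}
    for branch in branches:
        try:
            results[branch] = process(branch)
        except Exception as exc:  # isolate any per-branch error
            failures[branch] = str(exc)
            notify(branch, f"Weekly data for {branch} could not be processed: {exc}")
    return results, failures


def flaky_process(branch: str) -> dict:
    # Simulated pipeline: branch B02 has not submitted its file.
    if branch == "B02":
        raise FileNotFoundError("no report received")
    return {"branch": branch, "status": "ok"}


alerts = []
results, failures = run_all(["B01", "B02", "B03"], flaky_process,
                            lambda b, msg: alerts.append((b, msg)))
```

Here B01 and B03 complete normally, B02 ends up in `failures`, and exactly one alert is queued for B02.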

The Results: A Measurable Leap in Operational Efficiency

The automated reporting system had a transformative impact on the business, with both quantitative and qualitative benefits.

Quantitative Impact

- Time Reclaimed: Over 100 man-hours were saved every single month. This freed up branch managers to focus on high-value activities like coaching their teams, improving customer service, and driving sales.

- Accuracy Achieved: By eliminating manual data entry and consolidation, the system all but eliminated reporting errors. This fostered a newfound trust in the data across the organization.

- Speed to Insight: Consolidated, accurate reports are now waiting in every manager's inbox at 9:00 AM every Monday. This allows leadership to review weekend performance and plan the week ahead proactively.

Qualitative Benefits

- Enhanced Data Governance: The company now has a single, clean, and reliable source of truth for all operational reporting, which is invaluable for strategic planning.

- Effortless Scalability: The modular architecture means onboarding a new branch is as simple as adding a new entry to a configuration file. The system is ready to grow with the business.

- Improved Morale: Branch managers expressed immense relief at being freed from a tedious, repetitive, and stressful weekly task.

- Data-Driven Culture: Access to reliable, timely data has empowered managers at all levels to make faster, more informed decisions, fostering a more agile and responsive culture.
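The "Effortless Scalability" point above says onboarding a branch means adding a configuration entry. A minimal sketch of that idea follows, assuming a JSON config; the field names, example addresses, and `load_branches` are hypothetical. Validating the file before each run means a bad entry fails loudly instead of silently skipping a branch.

```python
import json

# Hypothetical branch configuration. Onboarding a branch = appending one
# entry to this list; the pipeline code that reads it does not change.
CONFIG_JSON = """
{
  "branches": [
    {"id": "B01", "manager_email": "b01.manager@example.com", "source": "email"},
    {"id": "B02", "manager_email": "b02.manager@example.com", "source": "sftp"}
  ]
}
"""

REQUIRED_KEYS = {"id", "manager_email", "source"}
KNOWN_SOURCES = {"email", "api", "sftp"}


def load_branches(text: str) -> list:
    """Parse the branch list and sanity-check it before a run starts."""
    branches = json.loads(text)["branches"]
    seen = set()
    for b in branches:
        missing = REQUIRED_KEYS - set(b)
        if missing:
            raise ValueError(f"branch entry {b} is missing {sorted(missing)}")
        if b["source"] not in KNOWN_SOURCES:
            raise ValueError(f"unknown source {b['source']!r} for {b['id']}")
        if b["id"] in seen:
            raise ValueError(f"duplicate branch id {b['id']}")
        seen.add(b["id"])
    return branches
```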

Best Practices: Key Takeaways for Architecting Your Own Automation

Building a successful automation system like this requires careful planning. Based on our experience, here are the key best practices to follow if you're considering a similar project. For a deeper dive, check out our guide on choosing the right automation platform.

- Design Before You Build: Treat your automation workflow like a software application. Before writing a single node, map out the entire process. Define your data sources, the logic flow, how data will be transformed, and how you'll handle exceptions. A clear blueprint saves countless hours of rework.

- Standardize Data Relentlessly: Your automation is only as reliable as the data it receives. The most critical step is enforcing a strict, standardized data schema at the very beginning of your pipeline. Garbage in, garbage out.

- Embrace Modularity: Don't build one giant, monolithic workflow. Break complex processes into smaller, reusable, single-purpose sub-workflows. This dramatically improves readability and maintainability, and makes debugging a breeze.

- Build Resilient Error Handling: Don't assume every step will succeed. Plan for API failures, network timeouts, missing data, and invalid formats. A truly robust system anticipates these issues and handles them gracefully without bringing the entire process to a halt.

- Separate Your Engine from Your Logic: Use a dedicated, reliable platform for running your automations. Trying to manage your own server, security patches, and software updates can quickly become a second full-time job, distracting you from the real goal: building efficient business processes.
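The "Build Resilient Error Handling" advice above covers API failures and network timeouts. A common pattern for transient errors is retry with exponential backoff: wait, try again, and double the wait each time. The sketch below is generic Python, not an N8N feature; `with_retries` is a hypothetical helper, and the default `retry_on` uses Python's built-in `TimeoutError` and `ConnectionError` only because real HTTP clients raise their own exception types, which you would pass in instead.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def with_retries(call: Callable[[], T],
                 attempts: int = 4,
                 base_delay: float = 1.0,
                 retry_on: Tuple[Type[BaseException], ...] = (TimeoutError, ConnectionError),
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Run `call`, retrying transient errors with exponential backoff.

    Waits base_delay, 2*base_delay, 4*base_delay, ... between attempts and
    re-raises the last error once `attempts` is exhausted. Errors not listed
    in `retry_on` (e.g. a malformed file) propagate immediately.
    """
    for attempt in range(attempts):
        try:
            return call()
        except retry_on:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)
    raise ValueError("attempts must be at least 1")


# Demo: an API that times out twice, then succeeds. Delays are recorded
# instead of actually sleeping.
calls = {"n": 0}


def flaky_api() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise TimeoutError("upstream timed out")
    return "payload"


delays = []
result = with_retries(flaky_api, sleep=delays.append)
```

Retrying only named transient errors is the important design choice: an invalid file will fail the same way every time, so it should go straight to the notification path instead.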

What's the one manual reporting task in your business you'd love to automate? Share your thoughts in the comments below!

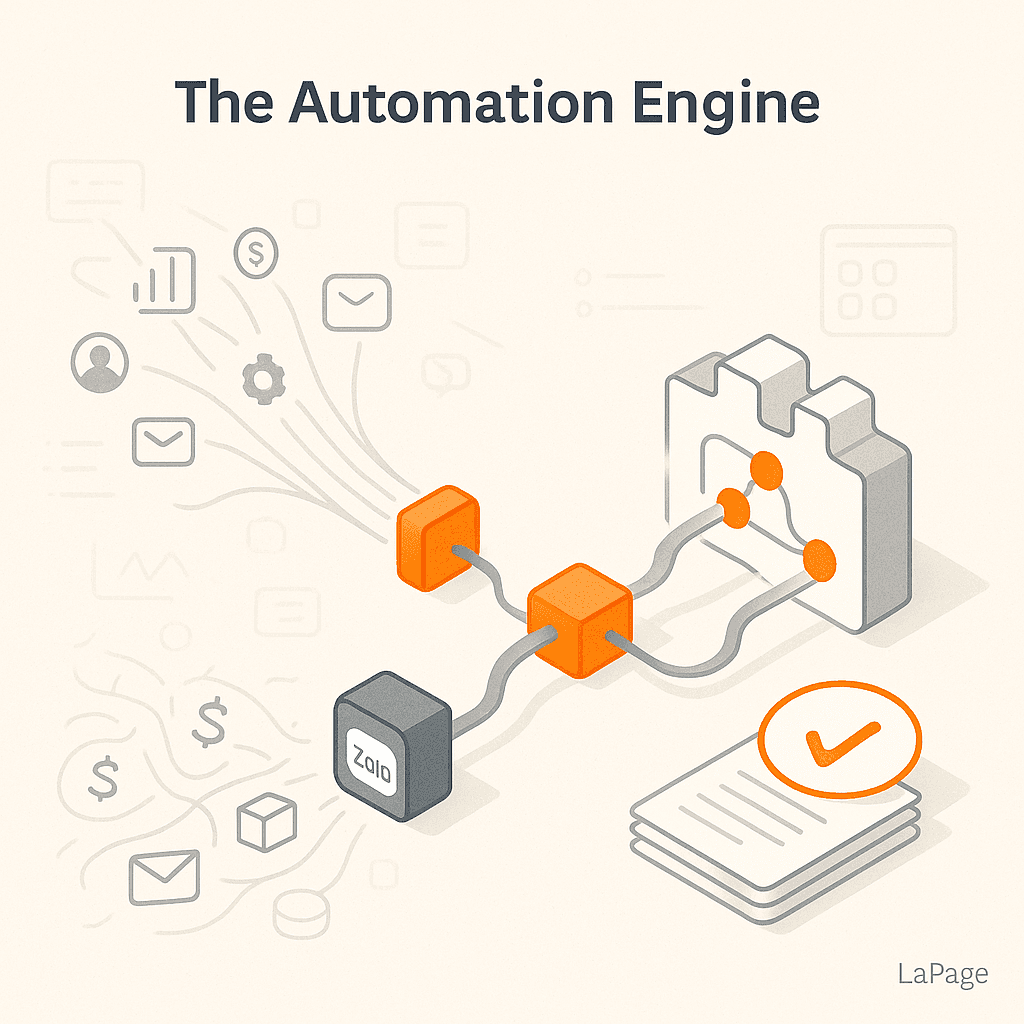

Ready to Build Your Own Scalable Automation Engine?

The power of the solution described lies in its robust workflow logic, running on reliable, managed infrastructure. Managing the security, uptime, and updates for an automation platform like N8N can be a significant technical burden, distracting you from what truly matters: building efficient processes that drive business value.

Focus on your automation, and let us handle the infrastructure. LaPage provides a fully-managed, high-performance N8N hosting environment, optimized for the demands of businesses in the APAC region. Get the rock-solid foundation you need to build scalable, enterprise-grade workflows just like this one.

Frequently Asked Questions

What is N8N?

N8N is a source-available, "fair-code" workflow automation tool that can be self-hosted. It uses a visual, node-based editor that allows you to connect different applications and services (like Google Sheets, Slack, databases, and thousands of APIs) to create powerful, automated workflows without extensive coding knowledge. We provide fully managed N8N instances so you can focus on building automations, not managing servers.

Can this automation work for other types of reports?

Absolutely. The principles and architecture discussed in this case study are highly adaptable. The same modular design can be used to automate financial reports, marketing analytics, inventory summaries, HR onboarding tasks, and virtually any other process that involves gathering, processing, and distributing data from multiple sources.

How much does a custom automation solution like this cost?

The cost of an automation project depends on the complexity of the workflows, the number of systems being integrated, and the volume of data. However, the return on investment (ROI) is often significant and rapid, especially when you factor in the reclaimed employee hours, reduction in costly errors, and the value of timely, data-driven decision-making.

What if my data sources don't have APIs?

This is a common challenge. The N8N platform is extremely flexible and can handle various data ingestion methods beyond APIs. As shown in the case study, we can use email webhooks to parse attachments, SFTP to retrieve files from servers, and even set up web forms for manual data entry into a standardized system. There is almost always a way to connect your data.
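To make "parse attachments" concrete, here is a plain-Python sketch using only the standard library's `email` package; in the case study this step ran inside N8N, so treat `csv_attachments` as a hypothetical illustration of the idea. It reads a raw email, picks out CSV attachments, and turns each into a list of row dictionaries ready for standardization.

```python
import csv
import io
from email import policy
from email.message import EmailMessage
from email.parser import BytesParser


def csv_attachments(raw_email: bytes) -> dict:
    """Return {filename: rows} for every CSV attachment in a raw email."""
    msg = BytesParser(policy=policy.default).parsebytes(raw_email)
    found = {}
    for part in msg.iter_attachments():
        name = part.get_filename() or "unnamed.csv"
        if part.get_content_type() == "text/csv" or name.lower().endswith(".csv"):
            text = part.get_content()
            if isinstance(text, bytes):  # non-text MIME types come back as bytes
                text = text.decode("utf-8")
            found[name] = list(csv.DictReader(io.StringIO(text)))
    return found


# Demo: build a branch email with a CSV attachment, then parse it back.
sample = EmailMessage()
sample["From"] = "b01.manager@example.com"
sample["To"] = "reports@example.com"
sample["Subject"] = "Weekly report B01"
sample.set_content("Report attached.")
sample.add_attachment("branch_id,revenue\nB01,1250.00\n", subtype="csv", filename="B01.csv")
rows = csv_attachments(sample.as_bytes())
```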

LaPage Digital

Passionate about building scalable web applications and helping businesses grow through technology.

Related Articles

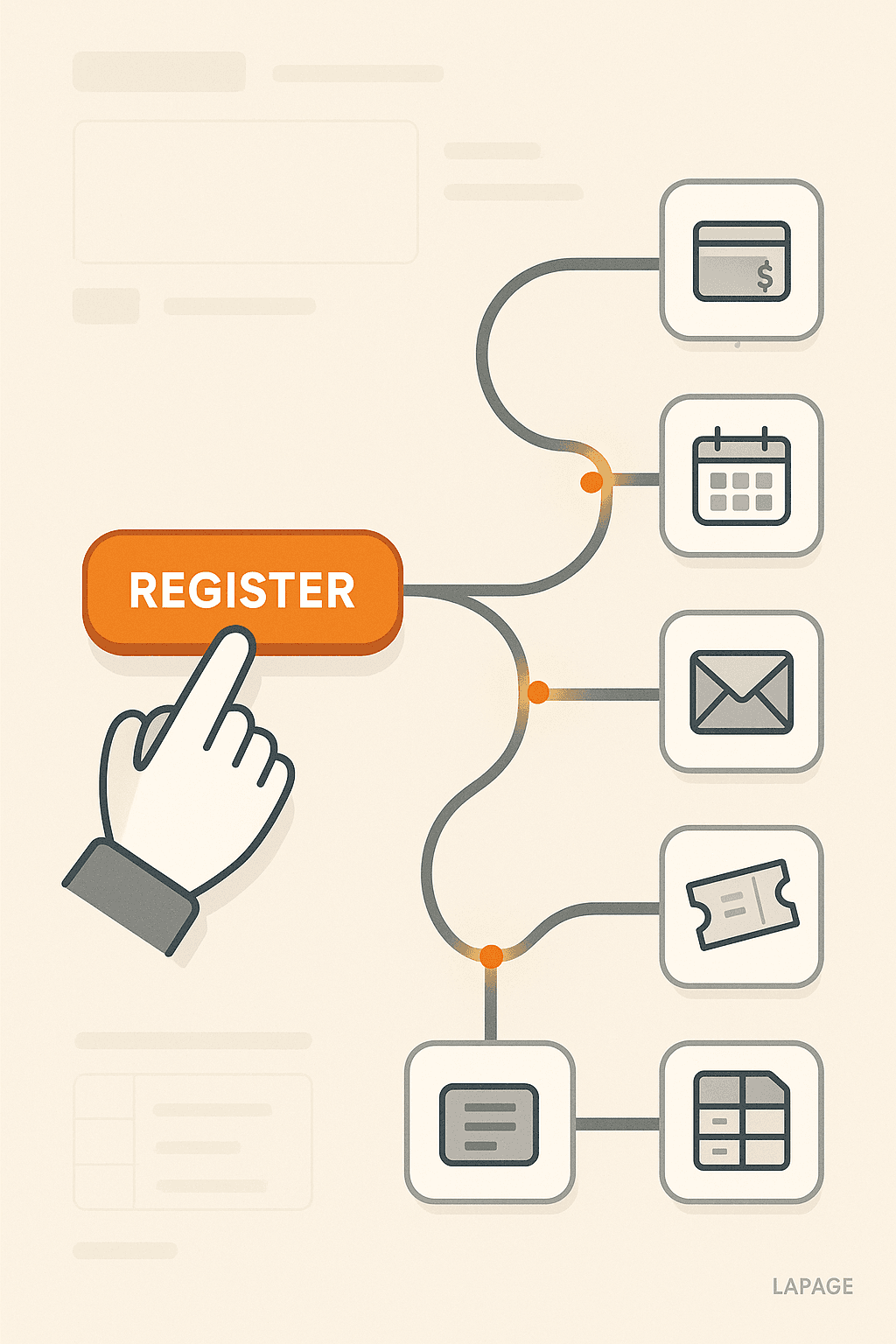

Real-Life Example: Automating Event Registrations

Customer Spotlight: Marketing Manager’s Success Story

5 Unexpected Ways Our Clients Use Workflow Automation

How Company X Cut Data-Entry Time by 80% with N8N

Subscribe to Our Newsletter

Get the latest articles, tutorials, and updates on web development and hosting directly to your inbox.